1. Introduction

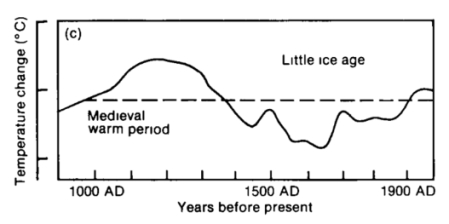

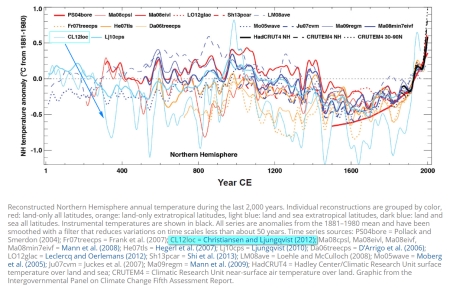

When we speak of global warming, the following picture is practically omnipresent:

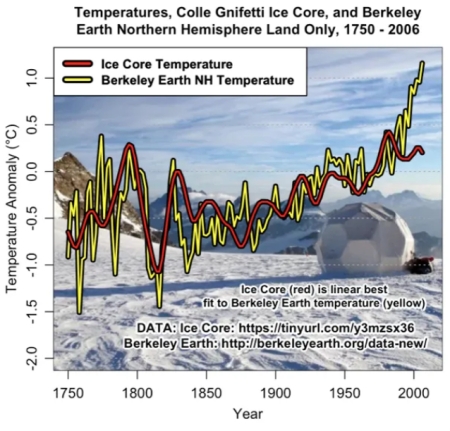

It presents the global temperature anomaly (i.e. the difference between the actual yearly temperature and the 1961-1990 average) as given by the three best-known temperature reconstructions: GISS (NASA), HADCRUT4 (England) and BERKELEY (Berkeley BEST project, USA). These series more or less agree for the last 50 years, but nevertheless show visible differences for the preceding 50 to 70 years. The data used are those from known weather stations, but also from proxies like tree rings, ice cores etc. What is rarely mentioned is that during the late 19th and early 20th centuries many famous scientists worked on the same problem: finding global mean yearly temperatures by latitude (the so-called zonal temperatures) and/or finding the global yearly isotherms, which were known not to coincide with the latitude circles. Many of these early researchers, like von Hann and von Bezold, were from Germany and published in German. This may explain the poor interest shown in these papers by “modern” researchers.

This situation has some similarities with the reconstructions of global CO2 levels. Here, too, mostly ice cores or other proxies are used, and the papers of the 19th-century scientists who made real CO2 measurements with chemical methods are often belittled. The late Ernst-Georg BECK (a German chemistry and biology teacher) made an outstanding effort to find and evaluate these old measurements, and found that these values were much more variable than “consensus” climatology claims. Together with Beck, I wrote a paper, published in 2009 by Springer, on how to try to validate these old measurements, of which there were not many and whose focus was typically local (link).

2. The KRAMM et al. paper

Gerard Kramm from Engineering Meteorological Consulting in Fairbanks and his co-authors (Martina Berger, Ralph Dlugi from the German Arbeitsgruppe Atmosphärische Prozesse, Munich, and Nicole Mölders, University of Alaska Fairbanks) published in Natural Science, 2020 (link) a very important paper on how researchers of earlier times calculated zonal, hemispheric and global annual temperatures. The very long title is “Meridional Distributions of Historical Zonal Averages and Their Use to Quantify the Global and Spheroidal Mean Near-Surface Temperature of the Terrestrial Atmosphere”, and this 45-page paper is a blockbuster. It contains its fair share of mathematics, and I had to read it several times to understand the finer points. I first stumbled on that paper through a discussion at the NoTricksZone blog (link), and you might well start by reading the comment of Kenneth Richard.

The four authors all seem to be German speakers, which explains why many citations are given in their original language. They tell us that very famous scientists of the second half of the 19th and the start of the 20th century worked to find global average temperatures. One must remember that in 1887, for instance, 459 land-based meteorological stations (outside the USA and the polar regions) and about 600 vessels gathered meteorological data; the first Meteorological Congress, held in Vienna in 1873, had standardized the equipment (for instance dry and moist thermometers). The best-known authors of the big climate treatises written in the 1852-1913 time span are von Hann (Julius Ferdinand von Hann, 1839 – 1921) and von Bezold (Wilhelm von Bezold, 1837 – 1907), who referred to numerous other authors.

The Kramm paper tries to validate the results given by these authors, using papers from other authors and mathematical calculations.
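As background for the kind of calculation involved: to turn zonal averages into a hemispheric or global mean, each latitude band has to be weighted by the share of the globe's surface it covers, since bands near the equator are much larger than bands near the poles. The short Python sketch below assumes a perfect sphere (the paper also treats a spheroid) and is only an illustration of the principle, not the method of Kramm et al. or of the historical authors; the function names and the band layout are hypothetical choices made for this example.

```python
import math


def band_weight(lat_south_deg, lat_north_deg):
    """Fraction of a sphere's surface between two latitude circles.

    On a sphere the area between latitudes phi1 and phi2 is proportional
    to sin(phi2) - sin(phi1); dividing by 2 normalises the whole globe to 1.
    """
    south = math.sin(math.radians(lat_south_deg))
    north = math.sin(math.radians(lat_north_deg))
    return (north - south) / 2.0


def global_mean(zonal_means):
    """Area-weighted mean of (lat_south_deg, lat_north_deg, temperature) bands.

    The bands need not cover the whole globe: passing only northern bands
    gives a hemispheric mean, because the weights are renormalised.
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for lat_south, lat_north, temperature in zonal_means:
        weight = band_weight(lat_south, lat_north)
        total_weight += weight
        weighted_sum += weight * temperature
    return weighted_sum / total_weight
```

Called with a list of bands such as `[(-90, 0, t_south), (0, 90, t_north)]`, `global_mean` returns the simple average of the two hemispheric values, because both hemispheres have the same area; with narrower bands the tropical ones dominate the result.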

Just to show how good the results of these authors were, look at the following extract of a graph from von Hann (1887) showing the zonal isotherms over the whole globe. I have added the text boxes:

The yellow dot shows the approximate location of Diekirch, slightly south of the 10°C isotherm. The yellow box shows that the mean temperature measured by meteoLCD was 10.6°C over the 21-year period 1998 – 2019, very close to the von Hann isotherm of 1887.

The authors write that “obviously the results of well-known climate researchers ….are notably higher than those derived from Hadcrut4, Berkeley and Nasa GISS”. So the question is: have these institutions (willingly or not) lowered the temperatures of the past and so amplified the global warming?

(to be continued)